Home > Learning Center > Round Trip Time (RTT)

Article's content

Round trip time (rtt), what is round trip time.

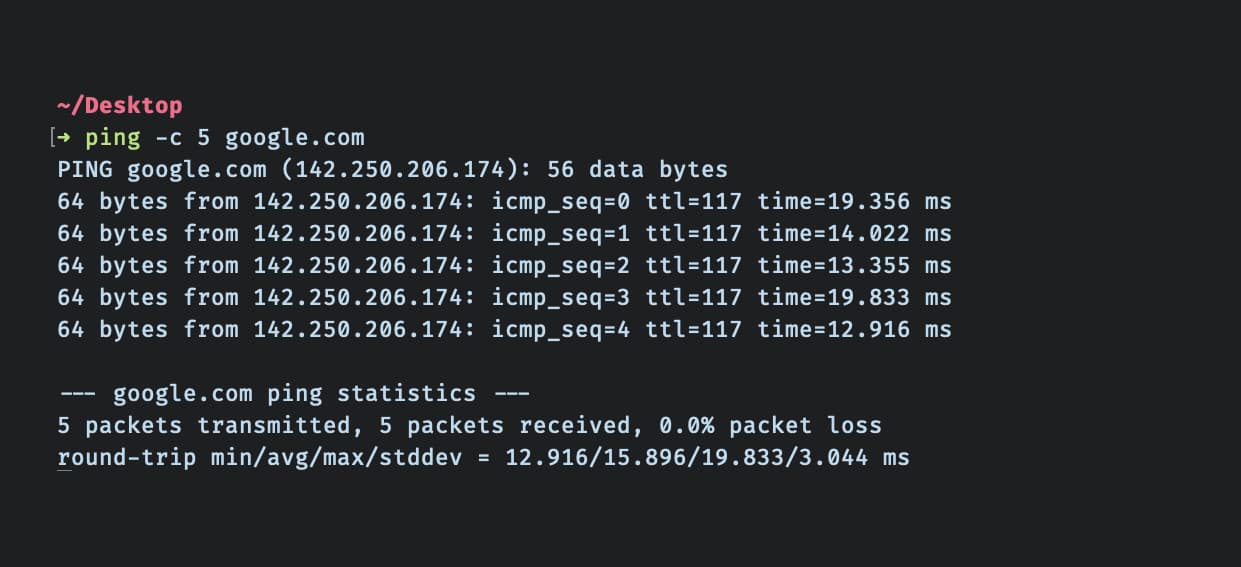

Round-trip time (RTT) is the duration, measured in milliseconds, from when a browser sends a request to when it receives a response from a server. It’s a key performance metric for web applications and one of the main factors, along with Time to First Byte (TTFB), when measuring page load time and network latency .

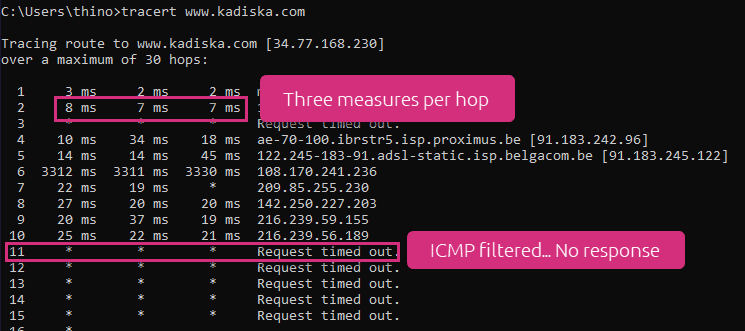

Using a Ping to Measure Round Trip Time

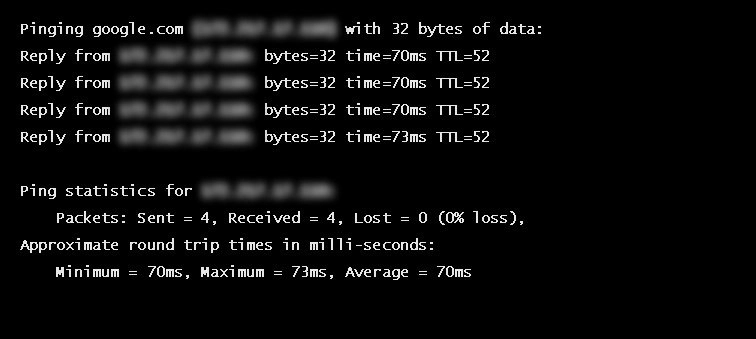

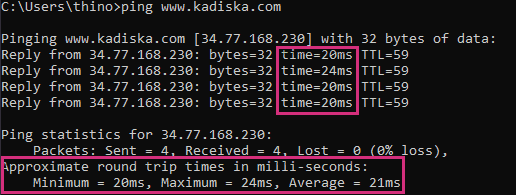

RTT is typically measured using a ping — a command-line tool that bounces a request off a server and calculates the time taken to reach a user device. Actual RTT may be higher than that measured by the ping due to server throttling and network congestion.

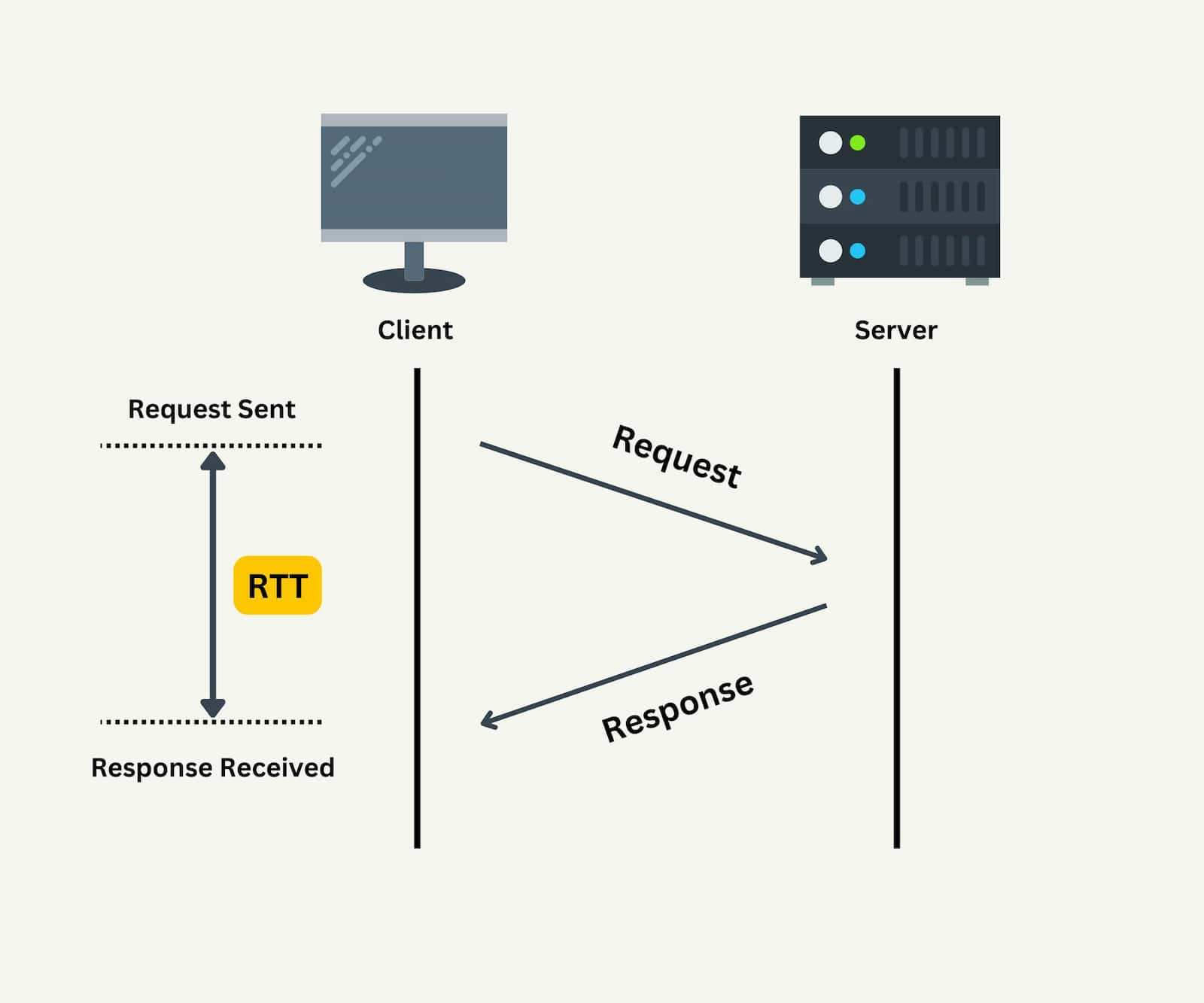

Example of a ping to google.com

Factors Influencing RTT

Actual round trip time can be influenced by:

- Distance – The length a signal has to travel correlates with the time taken for a request to reach a server and a response to reach a browser.

- Transmission medium – The medium used to route a signal (e.g., copper wire, fiber optic cables) can impact how quickly a request is received by a server and routed back to a user.

- Number of network hops – Intermediate routers or servers take time to process a signal, increasing RTT. The more hops a signal has to travel through, the higher the RTT.

- Traffic levels – RTT typically increases when a network is congested with high levels of traffic. Conversely, low traffic times can result in decreased RTT.

- Server response time – The time taken for a target server to respond to a request depends on its processing capacity, the number of requests being handled and the nature of the request (i.e., how much server-side work is required). A longer server response time increases RTT.

See how Imperva CDN can help you with website performance.

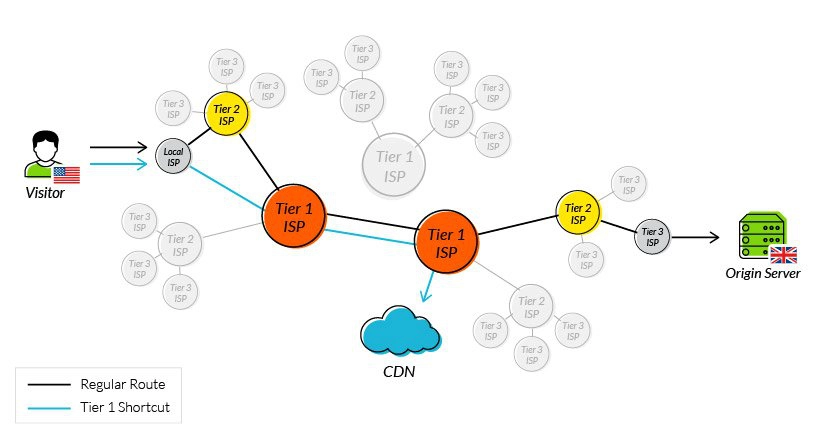

Reducing RTT Using a CDN

A CDN is a network of strategically placed servers, each holding a copy of a website’s content. It’s able to address the factors influencing RTT in the following ways:

- Points of Presence (PoPs) – A CDN maintains a network of geographically dispersed PoPs—data centers, each containing cached copies of site content, which are responsible for communicating with site visitors in their vicinity. They reduce the distance a signal has to travel and the number of network hops needed to reach a server.

- Web caching – A CDN caches HTML, media, and even dynamically generated content on a PoP in a user’s geographical vicinity. In many cases, a user’s request can be addressed by a local PoP and does not need to travel to an origin server, thereby reducing RTT.

- Load distribution – During high traffic times, CDNs route requests through backup servers with lower network congestion, speeding up server response time and reducing RTT.

- Scalability – A CDN service operates in the cloud, enabling high scalability and the ability to process a near limitless number of user requests. This eliminates the possibility of server side bottlenecks.

Using tier 1 access to reduce network hops

One of the original issues CDNs were designed to solve was how to reduce round trip time. By addressing the points outlined above, they have been largely successful, and it’s now reasonable to expect a decrease in your RTT of 50% or more after onboarding a CDN service.

Latest Blogs

- Imperva Threat Research

Gabi Sharadin

, Muly Levy

Jul 23, 2024 2 min read

Erez Hasson

, Lynn Marks

Jul 1, 2024 3 min read

Jun 20, 2024 4 min read

May 17, 2024 5 min read

Grainne McKeever

May 8, 2024 3 min read

Apr 16, 2024 4 min read

Mar 13, 2024 2 min read

Mar 4, 2024 3 min read

Latest Articles

- Network Management

176.5k Views

157.5k Views

113.4k Views

104.6k Views

103.2k Views

61.6k Views

56.4k Views

The DDoS Threat Landscape 2024

111% increase in DDoS attacks mitigated by Imperva

2024 Bad Bot Report

Bad bots now represent almost one-third of all internet traffic

The State of API Security in 2024

Learn about the current API threat landscape and the key security insights for 2024

Protect Against Business Logic Abuse

Identify key capabilities to prevent attacks targeting your business logic

The State of Security Within eCommerce in 2022

Learn how automated threats and API attacks on retailers are increasing

Prevoty is now part of the Imperva Runtime Protection

Protection against zero-day attacks

No tuning, highly-accurate out-of-the-box

Effective against OWASP top 10 vulnerabilities

An Imperva security specialist will contact you shortly.

Top 3 US Retailer

What is Round Trip Time - RTT vs TTFB vs Latency

Round Trip Time (RTT) is a metric that measures the time taken by a network packet to travel from sender to receiver and then back from receiver to sender.

RTT = Latency (Client to Server) + Server Processing Time + Latency (Server to Client)

RTT is a great metric to evaluate network performance but not the best at measuring how well the website is served to the end users. TTFB is a better metric for understanding server performance. Nevertheless, RTT directly influences TTFB and hence we must ensure that our website has a low RTT.

Here's a quick illustration of Round Trip Time.

In the above illustration, you can see that the time period between the Request sent from the client to the Response received by the client is the Round Trip Time. Apart from the latency, it might also include a little server processing delay.

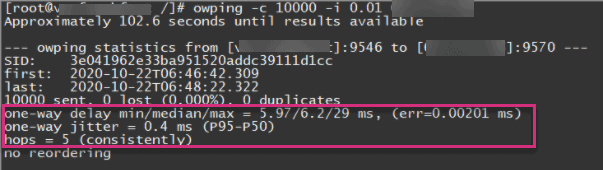

When measuring RTT, we ideally send a request such as ping that involves minimal processing and resources. This ensures that we can minimize the server processing and our RTT value mainly comprises of latency at both ends.

How to Measure Round Trip Time

To measure Round Trip Time, you can either open Terminal on Mac or Linux or open Command Prompt/Powershell on Windows. Use the ping command for any domain name or IP.

Here's an example showing the ping command for google.com.

You can use -c to limit the number of requests you want to send. Once the process is done, you'll get a summary of the RTT. It will list the minimum, average, maximum, and standard deviation values for the RTT. The farther you are from the physical server (or CDN's point of presence), the higher will be the RTT value.

What Factors Affect Round Trip Time

1) physical distance .

The longer the physical distance between the client and the server, the higher will be RTT. This applies to latency and TTFB as well.

2) Network Traffic

If any part of the network is congested due to high usage, there will be delays as the packets have to wait in a queue.

3) Network Hops Count

The more routers or servers a packet has to travel through, the higher will be the RTT. This is because each device will take some time to process it.

4) Server Response Time

Since Round Trip Time consists of the time when a request is sent by the client and the time when it is received back by the client, it adds some delay as the server needs to process the request in order to respond back. While measuring RTT, we try to minimize this time by using a simple request such as ping. However, for more complex requests, the server processing time is high and adds to the RTT value.

5) Transmission Medium

Optical Fiber offers the lowest RTT followed by other Wired connections such as Copper Cables. Wireless networks such as Wi-Fi, 5G, 4G, and Satellite Internet such as Starlink will have higher RTT.

6) Routing Paths

If the packet takes a more complex path over the internet, it could lead to a higher RTT. Some CDN Providers offer services that optimize the network paths.

7) Packet Size

The lower the size of the packet, the faster it can be transmitted.

RTT vs TTFB vs Latency

It is easy to get confused between these three as they all are very similar. To understand their difference better, let's start with the easiest one, i.e., latency.

Latency is only a one-way delay. It is the time taken by a network packet to travel from sender to receiver (or from receiver to sender).

RTT (Round Trip Time) is the two-way delay. It is the time taken by a network packet to travel from sender to receiver and then back to sender from the receiver.

TTFB (Time to First Byte) is a metric that is quite similar to Round Trip Time but it is measured for an HTTP request and includes the server processing delay along with the round trip time. SpeedVitals also offers a TTFB Test that you could check out.

RTT & Web Performance

Round Trip Time is an important metric for Web Performance as it directly influences TTFB which in turn affects various Web Vitals including Largest Contentful Paint (LCP) and Interaction to Next Paint (INP). Let us discuss how RTT affects Core Web Vitals and how to measure RTT for real users.

Impact on Core Web Vitals

Time to First Byte (TTFB) metric consists of Round Trip Time as well as Server Processing Time. It plays an important in the calculation of First Contentful Paint (FCP) and Largest Contentful Paint (LCP). Even if your website has a fast backend/server and your Frontend is well-optimized, you can still have a poor LCP score if the Round Trip Time is high.

Since a high RTT value increases TTFB, this results in a delay until all the critical resources are loaded and the website becomes interactive. This can also result in slower event handling and higher input latency. All of these factors can increase INP (Interaction to Next Paint), which is a new Core Web Vitals metric.

The only metric RTT doesn't have a direct impact on is Cumulative Layout Shift (CLS). Nevertheless, RTT has a direct impact on 2/3rd of Core Web Vitals.

We also have a free tool to check out your Core Web Vitals score. You can check it out on Core Web Vitals Checker .

Check RTT using CrUX API

The Chrome User Experience Report (CrUX) has recently started including the RTT values. You can learn more about the same on the metrics page of CrUX API Documentation .

However, this RTT value is measured for an HTTP request and not for a simpler request like ping. This would be ideal in the context of Web Performance but it could differ from measuring RTT via ping, and also because it is being measured for real users.

How to Reduce Round Trip Time

Our article on reducing TTFB covers both aspects (Reducing Server Response Time and latency). Some of the suggestions in that article will be applicable to reducing RTT as well.

Here are some suggestions that can help reduce the RTT of your website.

1) Use a CDN

As discussed earlier, using a CDN can dramatically reduce both RTT and TTFB as it makes copies of your website resources in multiple locations. When a user requests your website, the CDN serves the user through its closest Point of Presence.

You can refer to our article to find out the best CDN .

2) Consider using a Load Balancer

A load balancer can distribute the traffic to your origin server to multiple nodes and helps prevent network congestion during traffic spikes. Moreover, having multiple servers across the globe can reduce network delay as the content gets served from the node closest to the user (in case of no CDN, dynamic content, or Cache Miss).

3) Optimize Network Paths

Some CDNs offer features or add-on services that can optimize network paths by smart routine. Some of the popular services include:

- Cloudflare's Argo Smart Routing

- Fastly's Dynamic Site Acceleration

- Akamai's Adaptive Media Delivery

- How to Measure Round Trip Time

- 1) Physical Distance

- 2) Network Traffic

- 3) Network Hops Count

- 4) Server Response Time

- 5) Transmission Medium

- 6) Routing Paths

- 7) Packet Size

- RTT vs TTFB vs Latency

- Impact on Core Web Vitals

- Check RTT using CrUX API

- 1) Use a CDN

- 2) Consider using a Load Balancer

- 3) Optimize Network Paths

- Skip to primary navigation

- Skip to main content

- Skip to footer

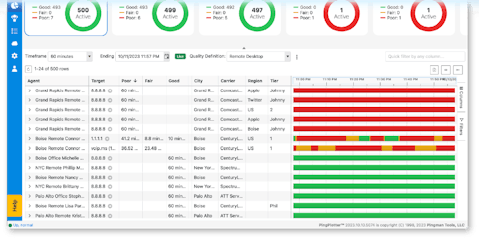

Cyara Customer Experience Assurance Platform

Blog / CX Assurance

December 12, 2023

What is Round-trip Time and How Does it Relate to Network Latency?

Tsahi Levent-Levi, Senior Director, Product

Round-trip time (RTT) is an important metric that can indicate the quality of communications available between two end-points. It’s a metric that our team often discusses with customers because it directly relates to the service quality experienced. RTT can be impacted by a range of design decisions, especially concerning network topology. However, there is some confusion around what exactly RTT is, how it relates to latency, how it can impact your service, and how you can improve it.

What is Round-trip Time?

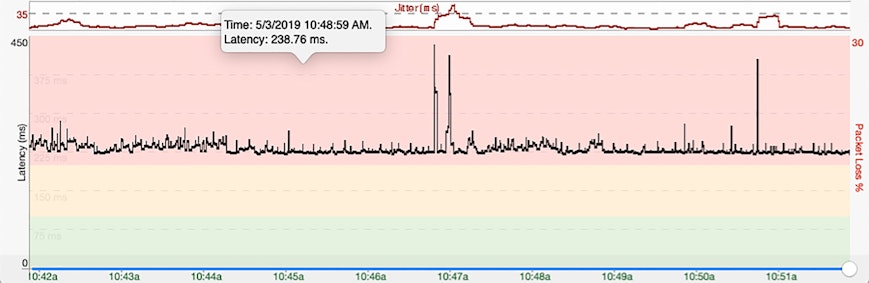

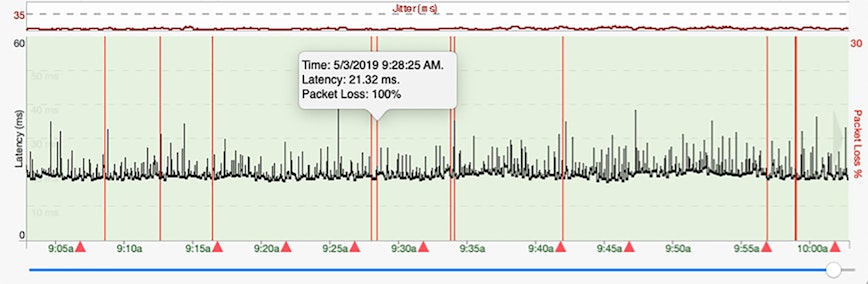

One of our most viewed dashboard metrics in our Cyara testRTC product suite is RTT. This is the time it takes for a packet to go from the sending endpoint to the receiving endpoint and back. There are many factors that affect RTT, including propagation delay, processing delay, queuing delay, and encoding delay. These factors are generally constant for a given pair of communicating endpoints. Additionally, network congestion can add a dynamic component to RTT.

Propagation delay is the network distance between the two endpoints. It is the route taken by the data across the various networks, through different network switches and routers to get from the sending endpoint to the receiving endpoint. Sometimes, this may be aligned with geographical distances and sometimes it may not. Propagation delay is usually the dominant component in RTT. It ranges from a few milliseconds to hundreds of milliseconds, depending on whether the endpoints are separated by just a few kilometers or by an entire ocean.

The remaining components (processing, queuing, and encoding delays) can vary by the number of nodes in the network connecting endpoints. When only a few router hops separate the endpoints, these factors are insignificant. However, the more hops, the higher the delay, since each network node needs to receive, process and route all the data towards the next hop, adding its own milliseconds of delay to the total RTT calculation.

Impact of Network Topology

In real-time communications, we must consider the impact of network topology on RTT. Any infrastructure-based topology introduces incremental delays when compared with a peer-to-peer connection. When media is anchored by a multipoint control unit MCU , SFU , or TURN server, additional processing, queuing and encoding delays occur. But, more importantly, an infrastructure topology can add significant propagation delay depending on where the server is located relative to the endpoints.

Figure 1: Infrastructure Topology

Hairpinning occurs when media is anchored in a location that is geographically remote from an endpoint, this adds significant propagation delay, when compared to a peer connection. This is why the placement of infrastructure can be critical to delivering low RTT and a high-quality user experience. The further the media server is from the sending and receiving endpoints, the higher the RTT value and the lower the service quality.

Figure 2: The media server is located further away than necessary from the sending and receiving endpoints, resulting in a high round-trip time.

Figure 3: The media server is located between the sending and receiving endpoints, resulting in a lower round-trip time.

Clearing Up a Few Misconceptions

RTT and ping time are often considered synonymous. But while ping time may provide a good estimate of RTT, it is different. This is because most ping tests are executed within the transport protocol using internet control messaging protocol (ICMP) packets. In contrast, RTT is measured at the application layer and includes the additional processing delay produced by higher level protocols and applications (e.g. HTTPS). In WebRTC, RTT on the media streams is calculated by looking at the secure real-time transport protocol (SRTP) packets themselves. This provides the closest measure to what the actual media in a session feels like in terms of RTT.

Network latency is closely related, but different from RTT. Latency is the time it takes for a packet to go from the sending endpoint to the receiving endpoint. Many factors affect the latency of a service, including:

- Network congestion

- Packet loss and jitter

- Traffic prioritization

- Server load

- Codecs and encryption

Therefore, latency is not explicitly equal to half of RTT, because delays may be asymmetrical between any two given endpoints. For example, RTT includes processing delay at the echoing endpoint.

How Does RTT Affect Your Real-time Communications Service?

As a rule of thumb, the lower the RTT, the higher the media quality for that session is. Our focus is on ensuring the delivery of live, highly interactive services and conversations. Doing that requires a low delay from the time a user speaks until the intended recipients hear the spoken words.

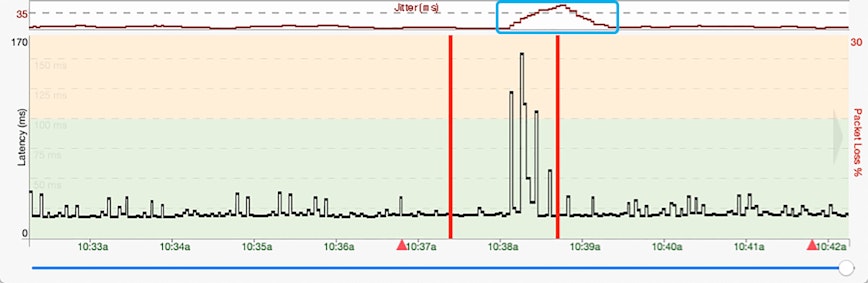

At Cyara, we’ve made RTT a central focus in all of our WebRTC services. We ensure it is available to you in both aggregate form (in highlight dashboards) as well as in drill down analysis charts where you can analyze RTT over time.

Read more about: Cyara testRTC , Latency , Round-Trip Time (RTT) , Web Real-Time Communication (WebRTC) , WebRTC Monitoring

Subscribe for Updates

Join our email list, and be among the first to learn about new product features, upcoming events, and innovations in AI-led CX transformation.

Written by Vasilena Markova • August 21, 2024 • 12:56 pm • Internet

Round-Trip Time (RTT): What It Is and Why It Matters

Round-Trip Time (RTT) is a fundamental metric in the context of network performance, measuring the time it takes for data packets to complete a round trip from source to destination and back. Often expressed in milliseconds (ms), RTT serves as a critical indicator for evaluating the efficiency and reliability of network connections. In today’s article, we dive into the concept of RTT, exploring how it works, why it matters in our digital lives, the factors that influence it, and strategies to enhance it. Whether you’re a casual internet user seeking a smoother online experience or a network administrator aiming to optimize your digital infrastructure, understanding this metric is critical in today’s interconnected world.

Table of Contents

What is Round-Trip Time (RTT)?

Round-Trip Time is a network performance metric representing the time it takes for a data packet to travel from the source to the destination and back to the source. It is often measured in milliseconds (ms) and is a crucial parameter for determining the quality and efficiency of network connections.

To understand the concept of RTT, imagine sending a letter to a friend through the postal service. The time it takes for the letter to reach your friend and for your friend to send a reply back to you forms the Round-Trip Time for your communication. Similarly, in computer networks, data packets are like those letters, and RTT represents the time it takes for them to complete a round trip.

How Does it Work?

The concept of RTT can be best understood by considering the journey of data packets across a network. When you request information from a web server, for example, your device sends out a data packet holding your request. This packet travels through various network devices in between, such as routers and switches, before reaching the destination server. Once the server processes your request and prepares a response, it sends a data packet back to your device.

Round-Trip Time is determined by the time it takes for this data packet to travel from your device to the server (the outbound trip) and then back from the server to your device (the inbound trip). The total RTT is the sum of these two one-way trips.

Let’s break down the journey of a data packet into several steps so you can better understand the RTT:

- Sending the Packet: You initiate an action on your device that requires data transmission. For example, this could be sending an email, loading a webpage, or making a video call.

- Packet Travel: The data packet travels from your device to a server, typically passing through multiple network nodes and routers along the way. These middle points play a significant role in determining the RTT.

- Processing Time: The server receives the packet, processes the request, and sends a response back to your device. This processing time at both ends also contributes to the Round-Trip Time.

- Return Journey: The response packet makes its way back to your device through the same network infrastructure, facing potential delays on the route.

- Calculation: It is calculated by adding up the time taken for the packet to travel from your device to the server (the outbound trip) and the time it takes for the response to return (the inbound trip).

Experience Industry-Leading DNS Speed with ClouDNS!

Ready for ultra-fast DNS service? Click to register and see the difference!

Why does it matter?

At first look, Round-Trip Time (RTT) might seem like technical terminology, but its importance extends to various aspects of our digital lives. It matters for many reasons, which include the following:

- User Experience

For everyday internet users, RTT influences the sensed speed and responsiveness of online activities. Low Round-Trip Time values lead to a seamless experience, while high RTT can result in frustrating delays and lag during tasks like video streaming, online gaming, or live chats.

- Network Efficiency

Network administrators and service providers closely monitor RTT to assess network performance and troubleshoot issues. By identifying bottlenecks and areas with high RTT, they can optimize their infrastructure for better efficiency.

- Real-Time Applications

Applications that rely on real-time data transmission, such as VoIP calls, video conferencing, and online gaming, are highly sensitive to RTT. Low RTT is crucial for smooth, interruption-free interactions.

In cybersecurity, Round-Trip Time plays a role in detecting network anomalies and potential threats. Unusually high RTT values can be a sign of malicious activity or network congestion.

Tools for Measuring RTT

Measuring Round-Trip Time is essential for understanding network performance. Two of the most commonly used tools are Ping and Traceroute. Here’s how to use them:

- Ping command

The Ping command is a fundamental tool available on most operating systems. It measures RTT by sending Internet Control Message Protocol (ICMP) echo requests to a specified IP address. It calculates the time it takes for a packet to travel to the target and back, providing the RTT in milliseconds. This tool is valuable for basic network diagnostics, helping you identify if a host is reachable and how long it takes to communicate with it. You can easily use it by following these steps:

- Open Command Prompt (Windows) or Terminal (macOS/Linux).

- Type “ping” and the target domain or IP address. Here is an example: ping domain.com

- Press Enter.

The output will display the RTT in milliseconds for each packet sent.

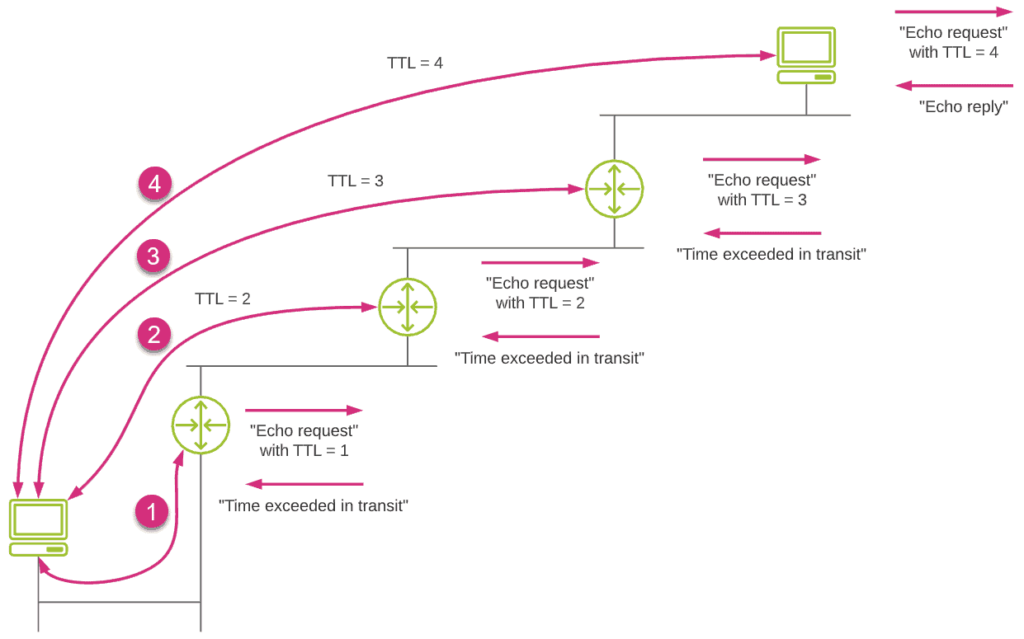

- Traceroute/Tracert

Traceroute (or Tracert in Windows) is a diagnostic tool that goes beyond just measuring RTT. It traces the entire path a packet takes to reach its destination, displaying each hop along the route and the RTT to each router. That way, it helps identify where delays or packet loss occur in the network, making it a crucial tool for finding issues in complex networks. To utilize it, follow the steps below:

- Type “tracert” (Windows) or “traceroute” (macOS/Linux) followed by the target domain or IP address. Here is an illustration: traceroute domain.com

The output will show the RTT for each hop along the route to the destination.

Factors Affecting Round-Trip Time (RTT)

Several factors can influence the metric, both positively and negatively. Therefore, understanding these factors is crucial, and it could be very beneficial for optimizing network performance:

- Distance: The physical distance between the source and destination plays a significant role. Longer distances result in higher RTT due to the time it takes for data to travel the network.

- Network Congestion: When a network experiences high volumes of traffic or congestion, data packets may be delayed as they wait for their turn to be processed. As a result, it can lead to packet delays and increased RTT.

- Routing: The path a packet takes through the network can significantly affect RTT. Efficient routing algorithms can reduce the time, while not-so-optimal routing choices can increase it.

- Packet Loss: Packet loss during transmission can occur due to various reasons, such as network errors or congestion. When lost, packets need to be retransmitted, which can seriously affect the Round-Trip Time.

- Transmission Medium: It is a critical factor influencing RTT, and its characteristics can vary widely based on the specific medium being used. Fiber optic cables generally offer low RTT due to the speed of light in the medium and low signal loss. In contrast, wireless mediums can introduce variable delays depending on environmental factors and network conditions.

How to improve it?

Improving Round-Trip Time (RTT) is a critical goal for network administrators and service providers looking to enhance user experiences and optimize their digital operations. While some factors affecting it are beyond our control, there are strategies and practices to optimize Round-Trip Time for a smoother online experience:

- Optimize Routing: Network administrators can optimize routing to reduce the number of hops data packets take to reach their destination. This can be achieved through efficient routing protocols and load balancing .

- Optimize Network Infrastructure: For businesses, investing in efficient network infrastructure, including high-performance routers and switches, can reduce internal network delays and improve RTT.

- Upgrade Hardware and Software: Keeping networking equipment and software up-to-date ensures that you benefit from the latest technologies and optimizations that can decrease RTT.

- Implement Caching: Caching frequently requested data closer to end-users can dramatically reduce the need for data to travel long distances. The result really helps with lowering RTT.

- Monitor and Troubleshoot: Regularly monitor your network for signs of congestion or packet loss. If issues arise, take steps to troubleshoot and resolve them promptly.

Discover ClouDNS Monitoring service!

RTT vs Latency

RTT and latency are related but not identical metrics in networking. Latency is the time it takes for a packet to travel from the source to the destination, often referred to as a one-way delay. RTT, on the other hand, measures the total time it takes for a packet to go to the destination and for a response to come back to the source.

It is important to mention that RTT is not always exactly twice the latency. Factors such as network congestion, processing delays at routers, and asymmetrical routing can cause RTT to differ from simply doubling the latency. For example, if the route from source to destination is more congested or longer in one direction, the round-trip time might be significantly higher than twice the latency.

Round-Trip Time (RTT) is the silent force that shapes our online experiences. From the seamless loading of web pages to the quality of our video calls, RTT plays a pivotal role in ensuring that digital interactions happen at the speed of thought. As we continue to rely on the Internet for work, entertainment, and communication, understanding and optimizing this metric will be crucial for both end-users and network administrators. By reducing it through strategies, we can have a faster, more responsive digital world where our online activities are limited only by our imagination, not by lag.

Hello! My name is Vasilena Markova. I am a Marketing Specialist at ClouDNS. I have a Bachelor’s Degree in Business Economics and am studying for my Master’s Degree in Cybersecurity Management. As a digital marketing enthusiast, I enjoy writing and expressing my interests. I am passionate about sharing knowledge, tips, and tricks to help others build a secure online presence. My absolute favorite thing to do is to travel and explore different cultures!

Related Posts

What is ICMP (Internet Control Message Protocol)?

July 17, 2024 • DDoS , Internet , Protocols

The ICMP (Internet Control Message Protocol) is a network layer protocol and also a supporting protocol in the Internet protocol suite. It ...

Ping Traffic Monitoring: Ensuring Network Health and Efficiency

March 28, 2024 • Monitoring

In an era where digital connectivity is the lifeline of businesses and individuals alike, maintaining optimal network performance is more ...

Ping of Death (PoD) – What is it, and how does it work?

December 5, 2023 • DDoS , Protection

Ping of Death sounds pretty scary, and it can bring down your server and keep it that way for an extended period of time using a simple ...

DNS Troubleshooting – tools and commands

October 19, 2023 • Tools

In the vast universe of network management and IT troubleshooting, issues related to the Domain Name System (DNS) often crop up. DNS is a ...

Leave a Reply Cancel reply

Your email address will not be published. Required fields are marked *

Recent Posts

- SMTP (Simple Mail Transfer Protocol) explained

- Botnet – what is it, and how does a Botnet attack work?

- Domain parking. What is it and why does it exist?

- What is SMTP Smuggling? How to detect and prevent it?

- What is Backup DNS?

- Cloud Computing

- DNS Records

- Domain names

- Load balancing

- SSL Certificates

- Uncategorized

- Web forwarding

- DNS Services

- Managed DNS

- Dynamic DNS

- Secondary DNS

- Reverse DNS

- DNS Failover

- Anycast DNS

- Email Forwarding

- Enterprise DNS

- Domain Names

- Monitor website traffic and optimize your user experience

- Evaluate which marketing channels are performing better

- Analyse aggregated data about usage of the website to understand our customers. Randomized identifiers shared with partners.

- Store your preferences from previous visits

- Collect user feedback to improve our website

- Evaluate your interests to provide you unique customised content and offers

- Make online and social advertising more relevant for you

- Invite specific customer groups to reconnect with our products later

- Share data with our advertising and social media partners via their third-party cookies to match your interests

Round Trip Time (RTT)

In the realm of network technology, there is a wealth of specialized terms and procedures. These elements pivotally support the smooth operation of the global Internet. Central among these is the concept of Round Trip Time (RTT). Understanding RTT is crucial for unraveling how data moves across networks, and its influence on the quality of online activities.

The term 'Round Trip Time', or RTT, denotes the timespan taken for a data unit to journey from its starting point to a specified destination and back. Simplistically put, it symbolizes the united timespan that includes signal transmission and the receipt of a responding signal. This counter-signal typically signifies successful acknowledgment of the original signal, dispatched from the destination back to the starting point.

RTT is a respected metric in network technology due to its profound impact on the efficiency and speed of data interchange. It serves as a 'heartbeat' of a network, determining critical attributes about its ongoing health and functional capacity.

Why RTT Matters

RTT matter extends beyond its capacity to measure time. It acts as a vital index of the performance of your network connection. A decreased RTT indicates speedy sending and reception of data packets, resulting in quick website content loading times, uninterrupted multimedia broadcasting, and reduced in-game network latency. Conversely, an increased RTT can result in Internet delays and a subsequent degraded user experience.

RTT: The Key Yardstick in Networking

RTT is pivotal in network technology as it is involved in multiple networking protocols like the Transmission Control Protocol (TCP) to direct the exchange of data packets amongst computer systems. For example, TCP uses RTT to specify the optimal waiting period for response receipt before it restarts data packet transmission. This approach minimizes repeated transmissions, thereby enhancing data buffering efficiency.

In conclusion, Round Trip Time (RTT) is a critical aspect of network technology. It measures how long a data chunk needs to travel from its origin to a selected spot and back. Familiarizing with this concept is vital for gaining insights into the workings of the Internet and strategies for boosting network performance. The subsequent sections delve deeper into RTT's intricacies, exploring its everyday implications for Internet usage, its significance in gaming, and its crucial role in network troubleshooting, among others.

Unraveling the Basics of RTT

RTT, acronym for Round Trip Time, underscores an imperative idea in the universe of networking. It specifically refers to the duration a chunk of data experiences during its travel from origin to endpoint, and returning back to the source. A comprehensive cognizance of this idea is instrumental in decoding the operational speed and dexterity of a network system.

Data Packet Transit: An Overview

A clear perception of RTT requires cognizance of the voyage undertaken by a data packet. When initiating a request online, such as prompting a hyperlink or sending an email, your device dispatches a chunk of data towards the server that hosts the desired website or emailing facility. This chunk of data, or packet, maneuvers through an array of routers and switches, each contributing a minor time incrementation to the overall journey. Upon the receipt of this packet, the server processes the request and generates a response that is directed back to your device. The complete duration taken for this travel constitutes the RTT.

Exploring RTT Components

RTT is an amalgamation of distinct elements. Initial element is propagation delay, reflecting the time consumption for a packet to move from origin towards its destination. The computation of this time duration takes into account the physical distance between the targeted points, aided by the pace of light within the medium, generally fiber optic cables.

The next element contributing to RTT is transmission delay, quantifying the duration for the data packet to gain physical entry onto the network. This duration is influenced by the packet size and the network's bandwidth capacity.

Additional elements include processing delay, highlighting the duration taken for a router or switch to handle the packet. This entails time taken for error identification and resolution, and routing.

The final ingredient in the RTT is queuing delay - the duration a packet spends in the queue awaiting processing by a router or switch, which is contingent upon the network's congestion status and the protocol-defined packet priority level.

RTT’s Function within TCP/IP

RTT acts as a significant clog in the mechanism of the Transmission Control Protocol/Internet Protocol (TCP/IP) - the basic internet protocol suite. TCP/IP utilizes RTT to ascertain the ideal window size favoring data upload and to formulate an accurate timeout duration for packets remaining unacknowledged.

Within TCP/IP, the Round Trip Time Estimator algorithm calculates the anticipated RTT (ERTT) grounded on the recently recorded RTT (MRTT) of previous packet transmissions. This mathematical computation follows the formula:

ERTT = (1 - α) ERTT + α MRTT

Here, α is a factor that oscillates between 0 and 1. The weightage of the recent RTT measurements is elevated in this formula, allowing the ERTT to adapt to the fluctuating network conditions.

Interplay of RTT and Ping

Measuring RTT is often conducted leveraging a tool such as ping. Activating ping directs a packet towards a defined destination, awaiting a desired response. The duration occurring between the dispatch and receipt of the packet equates to the RTT. Ping’s simplistic characteristics render it a capable instrument for RTT measurement and network issue identification.

In conclusion, RTT is a core evaluating factor for network efficiency determination. It encapsulates the duration experienced by a data packet from the moment of dispatch until its return journey. RTT plays an impactful role in the functionality of the TCP/IP protocol compliance. For those working alongside network or internet-centric technologies, an enriched understanding of RTT is crucial.

RTT in Daily Life: Everyday Examples

Our regular activities are intimately intertwined with a lesser-known concept, RTT (Round Trip Time). From emailing to gaming or video surfing, this underpinning principle becomes paramount in dictating your interaction's excellence. Let's illuminate this critical component with a few real-life illustrations.

Electronic Mail Exchange

Picture the journey of an email, fragmented into multiple data fragments, propelled across the internet stratosphere to land in the recipient's server. RTT fundamentally measures the span it takes for a single fragment to make a round trip between your system and the destination server. Elements of delay creep in with a high RTT, impacting the swiftness of your email delivery. Although this may appear inconsequential in standard exchanges, the ramifications are significant during time-critical correspondences.

Virtual Gameplay

In the virtual gaming universe, RTT becomes the invisible adjudicator of your experience. Every action you make, be it character motion or launching an attack, converts into data fragments moving towards the gaming server. This server digests this information before reciprocating a response. The chronology of this entire operation is termed RTT.

A low RTT manifests in a virtually instantaneous in-game reflection of your actions, creating a fluid, pleasurable gaming session. Conversely, a high RTT brings about an irritating delay or 'lag' between your maneuvers and in-game ramifications.

Digital Video Consumption

During a video playback, data fragments are in a constant relay from the content server to your gadget. RTT captures the timescale this fragment takes to make a return journey from the server to your device.

When RTT is minimal, you enjoy a smooth, non-stuttering video playback. But a high RTT gives rise to frequent halts due to buffering and a compromised video resolution, all because the data fragments are unable to match pace for a continuous video render.

Internet Surfing

Browsing the internet is essentially a practice of dispatching requests to respective web servers and receiving website data in return. RTT measures the timeframe for this exchange.

Reduced RTT ensures swift webpage rendering, enabling a fluid browsing experience. However, a high RTT translates to a sluggish loading speed, particularly noticeable when accessing data-intensive websites.

Therefore, RTT is an obscure key influencing our daily digital interactions. By grasping its function & impact, we can uncover the intricate mechanics empowering our online existence.

The Mathematics behind RTT: A Non-Complex Explanation

To parse the principles that are intertwined with Round Trip Time (RTT), one doesn't necessarily need to be a mathematician. Essentially, these principles are not beyond basic arithmetic and common sense. Here, we'll demystify the essentials.

Symbolic Representation

Think of RTT as a clock measuring the journey of a data packet as it moves from origin to destination and reintegrates into the origin. Its formulaic expression captures four distinct stages in this journey:

RTT = Journey Initiation Time + Travel Time + Waiting Time + Decoding Time

Here's a closer look at each of these phases:

- Journey Initiation Time: Envisage a highway with vehicles (data packages) rushing in at bursting speed. This time is essentially the span required for each vehicle to completely enter onto the highway. It's ascertained by the ratio of packet dimensions to the carrying capacity of the link.

- Travel Time: This second phase is about the transmigration of a single data fragment across the highway from the beginning to the endpoint. It's the ratio of the geographical gap between the two points to the speed of light in the transmission medium.

- Waiting Time: This is the time a data packet spends in line, waiting its turn to get processed. This duration can see significant fluctuations depending upon the pile-up on the highway.

- Decoding Time: It is the duration utilized by the routers and hosts to unravel the packet's identity from its header.

Sizing Implication on RTT

Packet or vehicle size plays a striking role in the dynamics of RTT. Higher dimensions lead to extended journey initiation and travel times, thus elevating RTT. Smaller sizes may pull down RTT but might simultaneously increase administrative load due to the swelling number of packets.

Bandwidth: A Key Player

Highway capacity or bandwidth can dramatically influence RTT. With more room to accommodate data, RTT drops. However, a greater bandwidth won't guarantee diminished RTT if traffic becomes overwhelming.

The Geographic Factor

The physical space between data sender and receiver fundamentally affects the travel time and hence the RTT. Expanded distances translate to increased RTT.

Network Traffic and RTT

The overall health of the highway, marked by scenarios like congestion and data loss, can substantially augment RTT. While congestion results in extended waiting duration, data loss necessitates re-dispatching of packets, thereby escalating RTT.

Let's emulate these ideas. Imagine a data unit of 1000 bytes in dimension, a highway of 1 Mbps bandwidth, a geographical gap of 1000 km between the sender and receiver, and light speed in medium measured at 200,000 km/s.

Deploying this input in the formula, we get:

- Journey Initiation Time = 1000 bytes * 8 bits/byte / 1 Mbps = 8 ms

- Travel Time = 1000 km / 200,000 km/s = 5 ms

- Considering negligible waiting and decoding times, the RTT equates to 8 ms + 5 ms = 13 ms.

This little illustration lights up our understanding of the rudimentary mathematics enmeshed within RTT. Mastering this knowledge can empower individuals to dissect the forces shaping RTT and how to manoeuvre it into achieving enhanced network output.

How RTT Impacts Your Internet Experience

As we venture into the digital wilderness, every device we use, be it a laptop, smartphone or a tablet, participates in a colossal information dance across the globe. This dance, which involves sending and receiving data, moulds the efficiency and smoothness of our online journey, with the Round Trip Time (RTT) operating as its intrinsic compass.

Decoding the Influence of RTT on Digital Experience

At the essence of RTT is the time an info-packet consumes to traverse from the initiation point (your gadget) to its terminus (the receiver server), and back. It’s a fundamental network barometer, intimately dictating the swiftness and reliability of your online connectivity.

Whenever you activate a weblink, dispatch an email or stream multimedia, your device is in a constant state of communication with the server that hosts the desired content. As the server returns the requested data, the clock ticks on this two-way journey - providing the RTT value.

A swift RTT translates into faster data transit, forging a slicker and fluid online journey. Conversely, a time-consuming RTT is responsible for frustrating hiccups such as buffering, lagging, and delays that could cripple your digital activities.

RTT’s Effect on Web Surfing

Think of the routine act of exploring a webpage. As you input a web address and press enter, your gadget shoots a request to locate and retrieve this webpage from its respective server. The server responds by sending the webpage details, which your browser converts into the visible screen content.

A time-intensive RTT slows down this relay race, potentially leading to delays and lag times. This becomes even more aggravating while navigating heavy-content platforms or multitasking across several tabs.

RTT’s Impact on Streaming Portals

Streaming platforms like Netflix, YouTube, and Spotify depend immensely on nimble RTTs to function seamlessly. As you enjoy a media file, your gadget is persistently soliciting additional data packets and receives them in return from the host server.

A sluggish RTT can trigger buffering lags as your device waits impatiently for data to land, placing unwanted interruptions in your streaming experience and causing potential dissatisfaction.

RTT and the Gaming Arena

Within the thrilling domain of online gaming, RTT could determine the victor and the fallen. Games mandate spontaneous interactions among players, and any communication delay can disturb the gaming rhythm.

A lethargic RTT time can instigate a lag – a noticeable and annoying lag between your gaming actions and the game’s reaction. In intense competitive gaming scenarios, every split second matters.

RTT and Virtual Meetings

In the contemporary remote work dynamics, video conferencing platforms such as Zoom and Microsoft Teams have become indispensable. Even these tools lean on efficient RTTs for uninterrupted, latency-free dialogues.

An elongated RTT may introduce delays in audio and visual inputs, potentially disrupting effective communication and causing potential confusion. This can have dire consequences in professional environments, where time is key, and clear dialogue is essential.

To manifest, RTT is an unsung hero shaping the quality of your digital interactions. A record low RTT time paves the way for a swift, efficient data relay, crafting a seamless online engagement. Contrarily, a slow RTT might introduce noticeable drags and disrupt your online immersion.

RTT in the World of Gaming: Making the Connection

In the digital sphere of gaming, each split-second is paramount. The deciding factor between triumph and defeat frequently rests on the pace and productivity of your network link speed. Here, the concept of Round Trip Time (RTT) becomes indispensable. Grasping the profound role of RTT can lead to maximizing your gaming prowess.

The Function of RTT in Virtual Gaming

When it comes to interactive gaming, RTT signifies the interval required for a data chunk to journey from your gaming unit (whether it's a console, desktop, or handheld device) towards the game host and return. This two-way journey is pivotal as it dictates the rapidity of your gaming response based on your controls and the maneuvers of other participants.

Take an example of you initiating a jump maneuver in the game, this instruction is relayed as a data chunk towards the game host. The host subsequently processes your instruction and reciprocates with a response, visualized on your interface. The entire time spent during this operation is your RTT.

RTT's Impact on Gameplay Quality

An optimized, low RTT translates to swift reaction times providing you an advantageous edge in adrenaline-pumping games where precision and speed hold the key. Conversely, a high RTT results in latency, prompting your game to respond lethargically.

This can be visualized using a first-person shooter game scenario. Sporting a high RTT, you take a shot at a rival but owing to the delay, the host logs your shot post the enemy's evasion, resulting in a miss. On the contrary, a minimal RTT records your maneuvers virtually instantaneously, providing you a realistic shot at the target.

RTT's Connection to Server Proximity

The geographical separation between your gaming equipment and the game host has substantial bearings on your RTT. Greater the distance to the host, the more time required for data chunks to complete their journey, culminating in a high RTT. It's no surprise that serious gaming enthusiasts gravitate towards hosts situated in close proximity.

RTT's Relationship with Network Traffic

Network traffic too has a pivotal role in defining your RTT. If your network is swarmed by numerous devices attempting simultaneous internet access, it curbs the data chunks' travel speed, resulting in an amplified RTT.

Quantifying RTT during Gaming

Almost all virtual games provide an avenue to verify your RTT or latency period. This is often illustrated in the game's configuration or flashed on the interface amid the gameplay. By vigilantly tracking your RTT, you can take corrective measures to reduce it when warranted, like shutting down bandwidth-consuming applications or opting for a proximal host.

In conclusion, RTT is of paramount significance in virtual gaming, wielding influence over your game's operational speed and your gaming session's quality. An awareness of RTT operations enables you to finely tune it, ensuring a competitive edge and enhancing your gaming enjoyment.

Network Diagnostics and RTT: A Deep Dive

Investigating network functions is pivotal for preserving and boosting the efficiency of a network. An instrumental index utilized in these probes is the Round Trip Time (RTT), which we'll expound on. We will dissect the interaction between RTT and network probes to offer a thorough insight into its value in resolving network complications and bolstering network operations.

Dissecting Network Probes

Network probes are an organized method applied to discover, isolate, and rectify issues connected to the network. They incorporate a succession of evaluations and verifications to study the network's functioning, pinpoint problem areas, and apply appropriate solutions. These evaluations could span from elementary ping evaluations to intricate scrutiny of network traffic.

Influence of RTT in Network Probes

RTT has a prominent role in network probes, delivering helpful data on the network functionality by calculating the duration needed for a data packet to journey from the origin to the endpoint and return. Extended periods for RTT regularly signify network pile-up, substantial latency, or alternative efficiency complications.

RTT and Efficient Network Operations

RTT is a reliable measure of network efficiency. A lesser RTT signifies an operational network with little latency, whereas an elevated RTT implies possible problems, for instance, network pile-ups or malfunctioning hardware. With RTT monitoring, network administrators can detect and tackle these issues swiftly, thus guaranteeing best network operations.

RTT and Network Problem-solving

RTT also aids considerably in network problem-solving. By comparing varying RTT values of separate network segments, operators can accurately locate a network issue. For example, repeated high RTT values in a specific segment could suggest an issue in that exact segment.

Instruments to Determine RTT

Several mechanisms can calculate RTT, each with their distinctive attributes and capabilities. A few often-used tools are:

- Ping : This basic command-line function dispatches an ICMP echo request to an assigned host and anticipates a response. The duration needed for the response to arrive is the RTT.

- Traceroute : This mechanism calculates the RTT needed for each step along the route from the origin to the endpoint. It aids in identifying the specific network segment responsible for high RTT rates.

- Network probes software : These all-encompassing tools offer real-time monitoring of network functionality, encompassing RTT. Examples of these tools consist of SolarWinds Network Performance Monitor and PRTG Network Monitor .

Techniques to Boost RTT

Boosting RTT is essential for improving network operations. Some of the strategies applied include:

- Load balancing : Apportioning network traffic across numerous servers can alleviate overload and lessen RTT.

- Quality of Service (QoS) : Giving priority to certain types of traffic can guarantee they encounter lower RTT.

- Route optimization : Selecting the shortest paths for data packet travels can minimize RTT.

In summary, RTT is a central measure in network probes. It offers crucial insights into network operations and assists in problem-solving. By accurately calculating and boosting RTT, operators can corroborate seamless and effective network operations.

Understanding Packet Travel and its Relation to RTT

The world of virtual transactions mandates a deep comprehension of the voyage adopted by data morsels. This signifies the path these morsels embark on while traversing from one endpoint to another via an internet grid, an intriguing journey, to say the least. This intricate path comprises numerous phases such as the encasing of data, broadcasting of signals, tracing the path, and ultimately unmasking at the intended endpoint. These phases attribute to the overall duration documented for a complete two-way trip (RTT) – a crucial metric of a network's operational competence.

Delineating the Expedition of Data Segmentations

Emphasizing the link between RTT and the motion of data segmentations involves an exploration of this voyage. As data disseminates over a grid, it disintegrates into minute parts labeled 'segmentations.' Each of these segmentations then obtains a protective coating of cardinal identifiers and trailing notes housing essentials about the origin, endpoint, and the correct order of the segmentation.

Initiated next is the travel over the grid for these fortified segmentations. The blueprint of the grid, coupled with the tangible stretch between the initiator and the receiver, outlines the quantity of routers and toggles the segmentations navigate through. Every device on the course scrutinizes the segmentation's identifiers to pinpoint the optimal pathway to the final destination.

On arrival at their destination, these segmentations shed their protective casings, and the introductory message undergoes reconstitution. The transmission process is announced successful if all segmentations arrive in the correct sequence, free of any anomalies.

How Segmentations Movement Influences RTT

The Round Trip Time (RTT) mirrors the comprehensive duration a data segmentation spends journeying from its origin, reaching the receiver, and retracing its path. It offers an insight into network delay or latency. An escalated stretch covered by the segmentation amplifies the RTT, subsequently depreciating the network’s efficiency.

A variety of elements could sway RTT during the segmentation's voyage:

- Dispatch Span: The duration expended in freeing the segmentation from its source to the endpoint. It is reliant on the segmentation's volume and the network's bandwidth. Vast segmentations and meager bandwidths culminate in extended dispatch spans and an escalated RTT.

- Signal Span: The essential duration for a signal to transit from the initiator to the receiver. It leans heavily on the geographical expanse and signal propagation speed. Extensive distances and slower velocities can trigger extended signal spans and an increased RTT.

- Computation Span: The duration a router or toggle takes to process each segmentation. The intricacy of the routing operation and the machine's efficacy show direct correlation. Complicated routes and inefficient machines inject lengthier computing spans, thereby escalating RTTs.

- Queue Span: The waiting duration a segmentation endures in a queue before getting addressed by a router or toggle. It pivots on network traffic. Overburdened networks result in lengthened queue spans and thus, higher RTTs.

Grasping these dynamics empowers network custodians to employ strategies to better segmentation traffic and minimize RTT, hence escalating network competence.

Drawing Parallels: Segmentations' Movement and RTT

The following matrix distinguishes the connection between segmentations' movement and RTT:

These findings reveal that each facet influencing segmentation movement correlates to its impact on RTT. Hence, enhancing segmentations' motion is fundamental in curbing RTT, thereby augmenting network efficiency.

To encapsulate, acquiring thorough knowledge of segmentation advancement and its connection with RTT is indispensable for those tasked with refining network movement or efficiency. Focusing on the factors that influence both segmentations' movement and RTT can enable proactive steps towards enhancing network operations, ensuring a seamless and efficient data exchange process.

RTT in Relation to Network Speed and Efficiency

Network performance and user satisfaction are paramount in the networking arena, highly dependent on two prime parameters: pace and proficiency. One pivotal component shaping these features is the Round Trip Time (RTT).

Unveiling Direct Impact of RTT on Network Pace

Essentially, RTT measures the time it needs for a data chunk to voyage from its origin, reach the destination, and return. The length of this tour mirrors the pace of your network. Lower RTT is synonymous with faster networks, hinting that data chunks are making their journeys quicker than usual. Conversely, elevated RTT levels correspond to languid networks - data chunks take an unusually long time to complete their round trips.

Here's a deciphered table to comprehend the essence:

Thus, Connection X, having the shortest RTT, exceeds in speed, while Connection Z, carrying the heaviest RTT, lags behind.

Unraveling Indirect Impact of RTT on Network Proficiency

RTT not only sets the pace but also molds the proficiency of a network. How effectively a network utilizes its resources to relay data chunks from origin to destination is its proficiency. Elevated RTT levels can stretch the waiting time for acknowledgement of the data chunks sent. Throughout this span, the sender stays dormant, sending no fresh data chunks, causing a gap in network potential. Such scenarios frequently arise in protocols like TCP relying on acknowledgments to steer data flow.

However, a contracted RTT lets the sender grab the acknowledgements quicker, permitting more data chunk transmission in the same span. This maximizes network potential, therefore enhancing proficiency.

Below is a clearer depiction:

In the end, RTT stands as a crucial determinant of the pace and proficiency of a network. Networks with lower RTTs race towards speed and proficiency, while those with higher RTTs lag. Hence, decoding and controlling RTT sits at the core of network performance enhancement.

Latency vs RTT: Essential Differences and Comparisons

Untangling the Web: Probing Network Functionality with a Focus on Delay and Complete Cycle Time (CCT)

Delay and Complete Cycle Time (CCT) are elemental factors that chiefly shape the efficacy of a network’s operation. While they are frequently interconnected, each presents separate facets of network data migration .

Clarifying Network Delay

Visualize network delay as a digital sand timer counting down fractions of a second. It shows the span needed for a data packet to travel from its initial source to its finishing destination within a network — a lightning-fast sprint from point A to point B, with the distance gauged in milliseconds (ms).

Factors such as the physical gap between the sender and the receiver nodes, the mode of data delivery (be it copper wires, fiber threads, or airwaves), along with the integration of multiple network components can affect this interval.

In-depth Analysis of Complete Cycle Time (CCT)

Conversely, CCT represents a full roundtrip — picture it as driving from your abode (point of departure), touring a botanical garden (final stop), and then heading back home (starting point). This accounts for the complete time investment needed for the roundtrip plus the waiting period at the stopover, along with the return to the onset. The CCT is proportional to the delay for the timing reflected in milliseconds (ms).

Separating Delay from CCT

These temporal facets are significant in detecting anomalies in network tasks. Typically, the swiftness of a network gets represented by delay, whereas the responsiveness gets determined by the CCT. Occasionally, a network might exhibit low delay, yet necessitate an extended period to wrap up a full cycle due to hold-ups and response lags at endpoints (high CCT).

Link between Delay and CCT

It’s vital to realize that CCT will invariably be on par with or surpass the delay. The CCT calculates the span consumed by data packets during a return trip, inclusive of surplus data handling time.

In theory, in a flawless network, the CCT would amount to twice the delay. However, practical complications like network interference, route hurdles, and traffic in network equipment generally cause the CCT to exceed twofold the span of the one-way journey.

Fundamentally, delay and CCT, while interconnected, divulge exclusive details for assessing network productivity. A keen comprehension of these disparities can markedly hone one’s proficiency in handling network-related intricacies with superior accuracy and productivity.

How to Measure RTT Accurately for Optimal Performance

The efficiency of web infrastructures is inherently determined by the accurate evaluation of its Data Turnaround Time (DATT), a crucial criterion used by IT specialists to detect and mend underperforming networks, enhance network output, and ensure a seamless working environment for end-users.

Constructing a Procedure for DATT Calculation

Observing DATT necessitates a rigorous analysis of the entire course a data unit navigates, from inception to termination. Given this task typically, transmission procedures such as ICMP (Inter-Network Communication Evaluation System) or TCP (Transmission Regulation System) are deployed. The major approaches used to scrutinize DATT include:

1. Echo Command : Among the prevalent techniques for measuring DATT is the 'echo' command. This sequence dispatches an ICMP echo plea to a predetermined site, and the infrastructure anticipates a reply. The duration taken to get this reply establishes the DATT value. Implement the echo command as such:

The outcome displays the DATT in milliseconds (ms).

2. RouteTrack Command : A viable addition to Echo, the 'routetrack' command lets you peep into the track a data unit covers and separately displays the DATT for each lap of its journey. Execute the routetrack command like this:

This will dissect the individual DATTs for each leg of the data unit's journey.

Undetectable Factors that Might Misinterpret DATT Calculations

Unrecognizable variables that may misrepresent DATT evaluations encompass:

- Surge in Network Consumption : An abrupt leap in network usage may lead to data unit stacking, thereby extending the DATT.

- Geographical Extent : The physical distance separating the source and the endpoint can augment DATTs due to extended transfer periods.

- Device Potency : The sufficiency and robustness of the tools used to create and gather data can also distort DATT calculations.

Sophisticated Instruments for Accurate DATT Estimation

For precise DATT estimates, Network supervisors can employ these universally acknowledged instruments:

- CybernetScope : Renowned for its extensive prowess in network procedure inspection, CybernetScope can analyze and display data unit DATTs.

- EchoMapper : This progressive apparatus combines 'echo' and 'routetrack' functionalities into a visual and intuitive depiction.

- VirtuNet : VirtuNet offers DATTs together with an array of comprehensive network performance markers.

Ensuring Dependable DATT Outputs

To retain reliable DATT outputs:

- Frequent Verification : Conduct multiple inspections at varied intervals to cross-verify network inconsistencies.

- Review of Network Consumption : Schedule assessments during peak and off-peak traffic slots for a balanced evaluation.

- Dependable Methods : Use extensively acknowledged tools known for their efficacy in DATT calculation.

In the final analysis, a precise appraisal of DATT enables IT gurus to ensure maximum network output. Adequate probing techniques, recognition of misleading variables, and the application of tried-and-tested tools are critical determinants in ascertaining a network's top-tier performance.

Techniques for Reducing and Controlling RTT

Pursuing an optimal online journey? Managing and trimming down Round Trip Time (RTT) is a paramount consideration. In this context, we'll explore a multitude of methodologies designed to thresh out this objective.

Grasping the Importance of RTT Trimming

It's favorably beneficial to comprehend the vital underpinnings of why RTT reduction is of supreme importance. Mile-high RTT measurements may retard network competency, impinging activities from web surfing to E-sport challenges and media broadcasting online. By constraining RTT, we are able to exponentially boost the online experiences.

Approach 1: Refining Network Apparatus

Carving the inaugural step towards RTT attenuation involves refining network apparatus. This consolidation consists of assuring optimal performance of all network devices, comprising of routers and switches. Periodic fine-tuning and upgrades can support this pursuit.

Additionally, the spatial configuration of the network might influence the RTT. To illustrate, an overextended network covering a vast geographical region will inherently possess an escalated RTT compared to networks confining to a smaller area. Hence, orchestrating the network configuration with a focus on RTT minimization can aid its reduction.

Approach 2: Deploying Content Delivery Networks (CDNs)

Deploying Content Delivery Networks (CDNs) are formidable in curtailing RTT. CDNs function by cacheing a website's content on an assemblage of servers dispersed globally. If a user petitions to view a webpage, the content is expedited from the nearby server, effectively diminishing the RTT.

Approach 3: Leveraging TCP Window Scaling

TCP Window Scaling is a technique that can aid in trimming down RTT in networks with towering bandwidth-delay product (BDP). Expanding the TCP window size allows an increase in the amount of unconfirmed data that can be transmitted before an acknowledgment is required, thus curbing RTT.

Approach 4: Pathway Refinement

Pathway refinement entails the selection of the most beneficial route for data packets to traverse from source to destination. Opting for the shortest and least congested route can contribute to significant RTT reduction.

Approach 5: Protocol Streamlining

Disparate protocols bear different RTTs. Such as, TCP commonly has a higher RTT compared to UDP . Therefore, protocol selection can play a vital role in RTT reduction by choosing the apt protocol for the requirement.

Approach 6: Deploying Condensation Techniques

Utilizing condensation methods may assist in shrinking the volume of data transmitted, thus restricting RTT. It should be highlighted, however, that condensation can also enhance processing time, thus a careful cost-benefit analysis is essential.

Approach 7: Controlling Buffer Bloating

Buffer bloating is a scenario where superfluous buffering of data packets elevates latency and jitter, additionally decreasing overall network pace. Taming this phenomenon can help in curtailing RTT.

To wrap up, managing and constraining RTT embraces diverse strategies, ranging from refining network apparatus and activating CDNs, to streamlining communication protocols and controlling buffer bloating. By activating these approaches, we can potentially uplift the online experiences comprehensively.

The Impact of RTT on Video Streaming and VoIP Calls

In the digital world, our dependence on advanced tech tools, such as online video streaming and internet-based calls (VoIP), is tremendous. The effectiveness of these tools is directly reliant on robust and rapid internet connections. The network's Round Trip Time (RTT)- the time taken for data packets to travel from source to destination and back, is a significant determinant of their performance.

Insights on RTT's Influence on Online Video Streaming

Our everyday activities often involve the exchange of video data, whether we're binge-watching TV shows on Netflix, gaining knowledge from YouTube educational content, or engaging in live chats on Facebook. The quality and reliability of these platforms are significantly affected by RTT.

When a video begins to stream, data packets take a journey from the origin server to the viewer's gadget. The complete circuit followed by these packets, from their origin to destination and return, constitutes the RTT. A high RTT implies a greater delay in data packet delivery, causing irritations such as continuous buffering or lagging, which drastically compromises the viewing experience.

In contrast, a lower RTT means faster connectivity, contributing to an uninterrupted streaming journey. Therefore, ensuring a minimal RTT is key to top-notch digital entertainment experience.

The Bearing of RTT on VoIP Calls

Similarly, RTT largely impacts the quality of VoIP calls. In a VoIP call, verbal communications are converted from analog signals into digital data packets, which are then dispersed across the network.

The effectiveness of a VoIP call is strongly dictated by its RTT. A high RTT has the potential to cause substantial audio transmission delays, resulting in undesirable effects like echoes or overlapping speeches. Such disruptions hinder the natural flow of conversation and induce user dissatisfaction.

On the other hand, a smaller RTT ensures an audible and realistic voice quality, resembling conventional telephone call experiences. Hence, lower RTT is essential for flawless VoIP communication.

Drawing Parallels: Effects of RTT on Video Streaming and VoIP Calls

How to Reduce RTT for Optimal Video Streaming and VoIP Calls Experience

Various methods can be employed to curtail RTT and enrich your video streaming and VoIP call experiences:

- Choose a wired network : Generally, a wired (cable) connection yields lower RTTs than a wireless one.

- Upgrade your internet package : A superior bandwidth package can substantially reduce RTT.

- Use a server in close geographical reach : Selecting a server near your location often culminates in a smaller RTT.

- Utilize Quality of Service (QoS) tools : QoS tools can prioritize certain network activities, thereby reducing RTT for video streaming and VoIP calls.

In summary, RTT significantly affects the experience of video streaming and VoIP calls. Understanding this fact and implementing strategies to minimize it can dramatically enhance users' online communication experiences.

RTT in Wireless Networks: An In-depth Analysis

Wireless networks have become our invisible companions, silently fuelling our digital existence, be it at home, work, or cafes. Yet, they leave us in a lurch when poorly performing, often attributed to factors like Round Trip Time (RTT).

Decoding RTT in Wireless Setups

Simply put, RTT is the full circle time a data packet takes from origin to the end-point and back to the origin. It's a barometer of various influencing elements such as network density, distance between data source and recipient, radio wave disturbances, and signal power.

Imagine streaming your favorite movie in an overpopulated café with numerous devices jostling for Wi-Fi. The network density escalates, leading to higher RTT. Likewise, connecting to the Wi-Fi from your garden might lead to weakened signals due to distance, further escalating RTT.

RTT's Impression on Wireless Network Efficiency

RTT propels a domino effect on the quality of wireless productivity. Imbalanced RTT triggers sluggish data movement, souring the digital experience. Streaming or gaming with escalated RTT is the culprit behind intermittent buffering or lagging.

In contrast, regulated RTT is the assurance of brisk data movement, promising uninterrupted, pleasurable digital navigation.

The Puppeteers of RTT in Wireless Ecosystems

- Distance: A stretched distance between sender and receiver expands the data packet's journey, inflating RTT.

- Network Density: A jam-packed network, bustling with multiple users or data packets, clutters the gateway, amplifying RTT.

- Radio Wave Disturbances: Any electronic equipment interfering with the wireless signal can deviate the signal, leading to augmented RTT.

- Signal Power: A feeble signal intensifies RTT since the data packets frequently miss the reception, triggering retransmission.

Estimating RTT in Wireless Ecosystems

Tools like the "ping" are your allies to gauge RTT in wireless environments. It propels a data packet to a designated IP and records the round trip time.

For example, on a Windows system, activate the Command Prompt, input "ping www.google.com", and the outcome will register the RTT time stamp in milliseconds.

Diminishing RTT in Wireless Ecosystems

Here are some tactics that can help mitigate RTT in wireless setups:

- Router Placement: An ideally placed router, preferably central and obstruction-free, boosts the signal strength.

- Minimizing Network Jam: Scrimping on device connectivity can help ensure fluid network traffic and deflate RTT.

- Dual-Band Router Utilization: Such routers can shift between two frequencies, mitigating interference and thereby lowering RTT.

- Updating Network Assets: Integration of contemporary networking assets and technology can enhance network quality, pushing down the RTT.

To sum up, understanding and handling RTT can make or break your wireless network’s efficiency. Harnessing knowledge about RTT, its influencers, estimation techniques, and mitigation strategies can help you sculpt an optimized, high-performance wireless network.

RTT: A Critical Factor in Telecommunications

Gauging network efficiency in telecommunications is incumbent upon a key metric known as the Bidirectional Transit Duration (BTD). This component significantly modifies and shapes the system's output, a relationship we will explore alongside its interaction with distinctive communication models, and how adept BTD control plays a definitive role in yielding superior performance.

BTD: An Indispensable Parameter in Telecommunication Platforms

To decode telecommunication jargon, Bidirectional Transit Duration or BTD refers to the time taken for the successful journey of data from its genesis to the allocated endpoint and back. Its impact in telecommunication processes is immeasurable as its influence is directly proportional to the pace and efficiency of data dispersion. Hence, a compressed BTD duration implies an amplified data transmission speed which triggers an enhancement in the quality of communication.

BTD serves as an indispensable yardstick assessing the performance of the network within the foundations of telecommunication infrastructure. This enables the identification and timely mitigation of impending issues, thus optimizing network function to superior stages. For instance, an elevated BTD can be indicative of network overloading, obligating administrative intervention for apt adaptations.

Repercussion of BTD on Protocols for Communication

Numerous communication guidelines experience the profound impression of BTD. Consider the example of Communication Regulation Protocol (CRP). This protocol depends on BTD to determine the most flexible volume designated for data exchange. In this setting, the term 'volume' denotes the quantity of data that can be dispatched without the requirement for acknowledgement of receipt from the recipient's end. By modulating this volume in accordance with BTD parameters, CRP can facilitate uninterrupted exchange of data, thus averting any possible bottlenecks.

Tailoring BTD for Luxuriant Performance

Exemplary telecommunication output warrants proficient BTD control. This includes regular monitoring of BTD values, noting deviations, and initiating rectifying procedures.

- BTD Parameter Observation : Diurnal BTD observation can pinpoint potential network issues. Instruments like Axial Monitor and Navigation Tracker can be availed for this purpose.

- Deviation Monitoring : A remarkable shift from the typical BTD values can portend possible network issues. For instance, a sudden surge in BTD could be indicative of network bottlenecks or malfunctioning equipment.

- Adaptation Initiatives : Once the anomaly is detected , immediate curative steps need to be taken. Such actions may encompass rerouting of traffic channels, upgrading system hardware or modifying network settings.

To encapsulate, BTD has yielded itself indispensable, imposing a paramount influence in the realm of telecommunications, bearing a pronounced effect on its output and productivity. By maintaining a stringent measure on BTD, telecommunication service providers can ensure unrivalled service, consequently refining user experience.

How RTT Shaped the Internet: A Historical Perspective

RTT (Round Trip Time) has been instrumental in the transformation of the internet throughout the ages. A deeper study of RTT's influence on successive internet modifications aids our understanding of its comprehensive evolution.

RTT: The Catalyst for Advanced Network Functionality